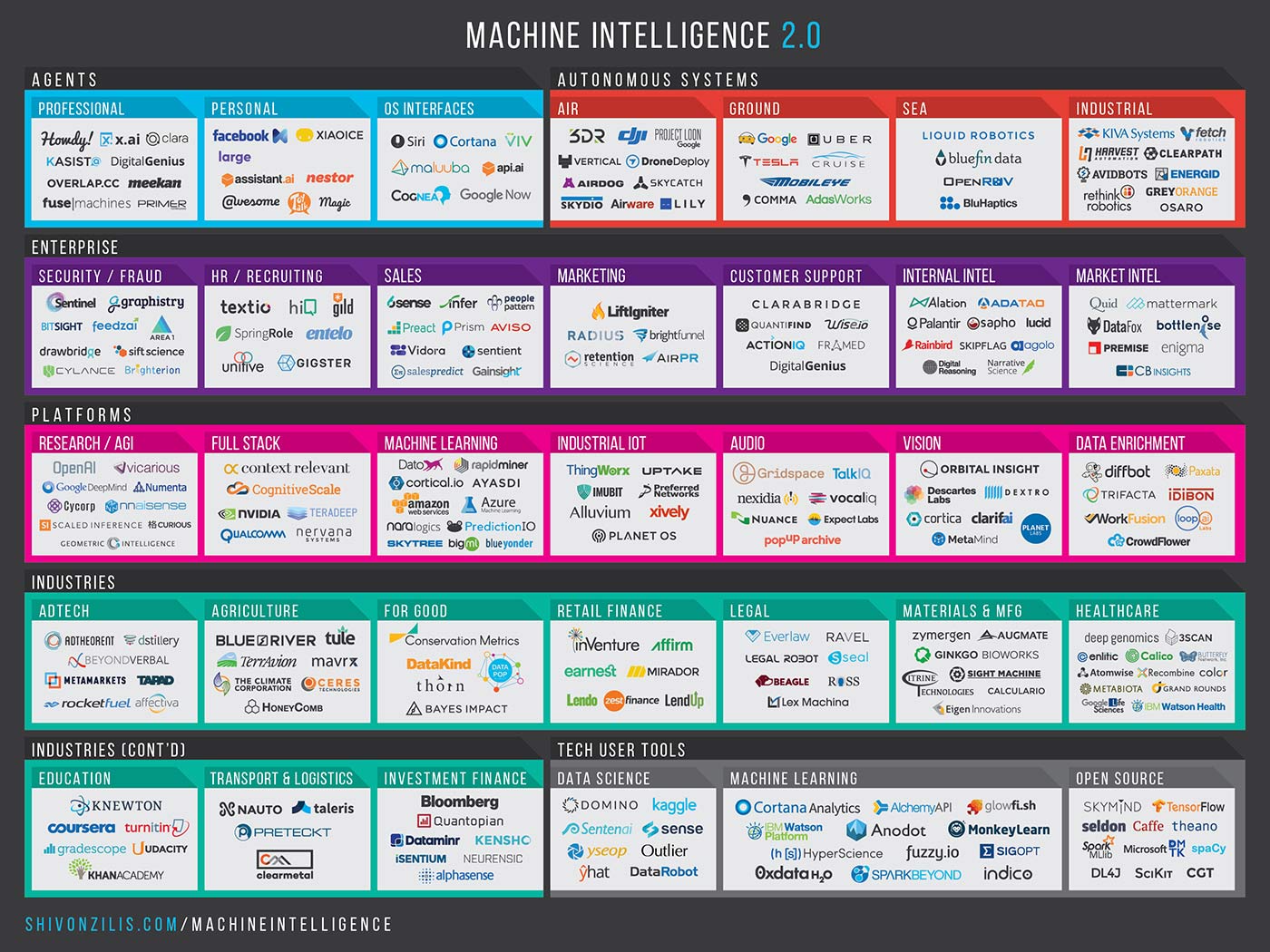

My friend Shivon Zilis from Bloomberg Beta has just updated her annual machine learning chart. Here is the blog post published by O'Reilly.

Thursday, December 17, 2015

Wednesday, December 16, 2015

Ilya Sutskever heads OpenAI

A new non-profit AI research initiative, OpenAI, was announced this week. OpenAI is headed by Ilya Sutskever, one of the most famous deep learning researchers. Based on Ilya's background, it seems they will initially focus on deep learning technologies.

Monday, December 14, 2015

A quick introduction to speech recognition and natural language processing with deep learning

I asked my colleague and friend Yishay Carmiel, head of Spoken Innovation Labs, to give me a quick tutorial on state-of-the-art deep learning for speech recognition and NLP.

Speech Recognition

In terms of software implementation, all the big companies are building personal assistants whose core technology is speech recognition: Google Now, Microsoft's Cortana, Apple’s Siri and Amazon’s Echo.

Speech recognition has been a research field for more than 40 years. Building a speech recognition system is a huge algorithmic and engineering task, so it is very hard to point to three specific research papers that cover the whole topic. A nice paper I can refer to is "Deep Neural Networks for Acoustic Modeling in Speech Recognition: The Shared Views of Four Research Groups". It is a 2012 research paper by four different research groups on the huge impact DL has made on speech recognition. By now this is “old stuff”; the technology is moving forward at a blazing pace. I also think that the book by top Microsoft researchers, “DEEP LEARNING: Methods and Applications”, is a good place to understand what’s going on.

I do not know of any good video lectures on deep learning for speech. There may be some, but to be honest I have not looked for a long time.

There are two well-known open source toolkits for speech recognition:

(i) Sphinx – Open source by CMU. It is quite easy to work with and to start implementing speech recognition. However, as far as I know, the downside is that it does not have deep learning support, only GMM-based models.

(ii) Kaldi – By far the most advanced open source toolkit in this field. It has all the latest technologies, including state-of-the-art deep learning models, WFST-based search, advanced language model building techniques and the latest speaker adaptation techniques. Kaldi is not a plug-and-play program; it takes a lot of time to understand how to use it and adapt it to your needs.

Natural Language Processing

Natural Language Processing is a broad field with a lot of applications, so it is hard to point to a specific DL approach. Right now, word representations and document/sentence representations using RNNs are the secret sauce for building better models. In addition, a lot of NLP tasks are based on some kind of sequence-to-sequence mapping, so LSTM techniques give a nice boost there. I also think that memory networks will have an interesting impact in the future.

DL is a tool for building better NLP technologies, so I assume the big companies are applying these techniques to improve their product quality. Good examples would be Google semantic search and IBM Watson.

I would refer to “Distributed Representations of Words and Phrases and their Compositionality” to understand the impact of word2vec, and to “Sequence to Sequence Learning with Neural Networks” to understand the impact of LSTM-based models.

There are very good video lectures from Stanford: “CS224d: Deep Learning for Natural Language Processing”. They give good coverage of the field.

Since NLP is a broad field with a variety of applications, it is very hard to point to a single source. I think that Google’s TensorFlow offers a variety of interesting stuff, although it is not easy to work with. For word/document representation there is Google’s word2vec code, gensim and Stanford's GloVe.
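To make the idea of word representations a bit more concrete, here is a minimal sketch of how word vectors are typically compared, using cosine similarity. The three-dimensional vectors are hand-made toy values, not the output of word2vec, gensim or GloVe, and the helper names are made up for this illustration.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical toy embeddings (assumption: real models learn hundreds of
# dimensions from large corpora; these values are invented for illustration)
toy_vectors = {
    'king':  [0.90, 0.80, 0.10],
    'queen': [0.85, 0.90, 0.15],
    'apple': [0.10, 0.20, 0.95],
}

def most_similar(word, vectors):
    """Return the other word whose vector is closest to `word` by cosine."""
    candidates = [w for w in vectors if w != word]
    return max(candidates,
               key=lambda w: cosine_similarity(vectors[word], vectors[w]))

nearest_to_king = most_similar('king', toy_vectors)
print(nearest_to_king)
```

With learned embeddings the same comparison is what makes related words end up as nearest neighbors.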

Yishay Carmiel, short bio: Yishay is the head of Spoken Labs, a big data analytics unit that implements bleeding-edge deep learning and machine learning technologies for speech recognition, computer vision and data analysis. He has 15 years' experience as an algorithm scientist and technology leader, has worked on building large-scale machine learning algorithms, and has served as a deep learning expert. Yishay and his team are working on bleeding-edge technologies in artificial intelligence, deep learning and large-scale data analysis.

rd.io acquired by Pandora

Just heard that rd.io was acquired by Pandora (a month ago, but news travels slowly). I have friends on both sides of the fence! rd.io was not in good shape, but at least now they are in good hands.

Saturday, December 12, 2015

Belief Propagation in GraphLab Create

As you may know, I really like probabilistic graphical models, and my PhD thesis focused on Gaussian Belief Propagation. My colleague Alon Palombo has recently implemented a graphical model inference toolkit on top of GraphLab Create. We are looking for academic researchers or companies who would like to try it out.

Here is a quick example for graph coloring using BP:

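In [2]:

    import graphlab as gl  # GraphLab Create
    # `bp` (used below) is the belief propagation toolkit module;
    # its import is not shown in the original notebook

    # Cube graph: 8 binary variables (corners) and 12 edges
    cube = gl.SGraph()
    # Pairwise potential that strongly penalizes neighbors sharing a state
    edge_potential = [
        [1e-08, 1],
        [1, 1e-08],
    ]
    # Vertex 0 is pinned to state 0; the other priors are uninformative
    variables = gl.SFrame({'var' : range(8),
                           'prior' : [[1, 0]] + [[1, 1]] * 7})
    cube = cube.add_vertices(variables, 'var')
    edges = gl.SFrame({'src' : [0, 0, 0, 1, 1, 2, 2, 3, 4, 4, 5, 6],
                       'dst' : [1, 2, 4, 3, 5, 3, 6, 7, 5, 6, 7, 7],
                       'potential' : [edge_potential] * 12})
    cube = cube.add_edges(edges, src_field='src', dst_field='dst')
    cube.show(vlabel='__id')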

In [3]:

    coloring = bp.belief_propagation(graph=cube,
                                     prior='prior',
                                     edge_potential='potential',
                                     num_iterations=10,
                                     method='sum',
                                     convergence_threshold=0.01).unpack('marginal')

In [4]:

    coloring['color'] = coloring.apply(lambda x: x['marginal.0'] < x['marginal.1'])

In [5]:

Out[5]:

| __id | marginal.0 | marginal.1 | color |
|---|---|---|---|
| 5 | 1.0 | 1.00000003e-24 | 0 |
| 7 | 1.00000003e-24 | 1.0 | 1 |
| 0 | 1.0 | 0.0 | 0 |
| 2 | 1.00000002e-24 | 1.0 | 1 |
| 6 | 1.0 | 1.00000003e-24 | 0 |
| 3 | 1.0 | 1.00000003e-24 | 0 |
| 1 | 1.00000002e-24 | 1.0 | 1 |
| 4 | 1.00000002e-24 | 1.0 | 1 |

In [7]:

    cube.show(vlabel='__id', highlight=cube.vertices[coloring['color']]['__id'])
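For readers without access to the toolkit, here is a rough pure-Python sketch of loopy sum-product BP on the same cube. It is not the toolkit's implementation: the function names, the synchronous update schedule and the fixed number of iterations are all assumptions made for this illustration, and it only handles binary variables with one shared, symmetric edge potential.

```python
# Minimal loopy sum-product BP for binary variables with one shared,
# symmetric pairwise potential. Illustrative sketch only; this is NOT the
# toolkit's implementation, and all names here are made up for the example.

def normalize(vec):
    total = sum(vec)
    return [v / total for v in vec]

def loopy_bp(priors, edges, potential, num_iterations=10):
    """priors: {vertex: [p0, p1]}; edges: list of (u, v) pairs;
    potential[a][b]: compatibility of neighboring states a and b."""
    neighbors = {v: [] for v in priors}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    # messages[(i, j)] is the message from i to j, indexed by the state of j
    messages = {(i, j): [0.5, 0.5] for i in neighbors for j in neighbors[i]}
    for _ in range(num_iterations):
        updated = {}
        for (i, j) in messages:
            out = [0.0, 0.0]
            for xj in range(2):
                for xi in range(2):
                    term = priors[i][xi] * potential[xi][xj]
                    # Multiply in messages from all neighbors of i except j
                    for k in neighbors[i]:
                        if k != j:
                            term *= messages[(k, i)][xi]
                    out[xj] += term
            updated[(i, j)] = normalize(out)
        messages = updated  # synchronous update of all messages
    beliefs = {}
    for i in neighbors:
        belief = list(priors[i])
        for k in neighbors[i]:
            belief = [belief[x] * messages[(k, i)][x] for x in range(2)]
        beliefs[i] = normalize(belief)
    return beliefs

# Same cube as in the notebook: vertex 0 pinned to state 0
src = [0, 0, 0, 1, 1, 2, 2, 3, 4, 4, 5, 6]
dst = [1, 2, 4, 3, 5, 3, 6, 7, 5, 6, 7, 7]
cube_edges = list(zip(src, dst))
cube_priors = {v: [1.0, 1.0] for v in range(8)}
cube_priors[0] = [1.0, 0.0]
edge_potential = [[1e-08, 1.0], [1.0, 1e-08]]

beliefs = loopy_bp(cube_priors, cube_edges, edge_potential)
# Color 1 where state 1 is more likely, mirroring the notebook's 'color' column
colors = {v: int(b[0] < b[1]) for v, b in beliefs.items()}
print(colors)
```

Because the cube is bipartite, pinning a single vertex is enough for the messages to settle on one of the two valid 2-colorings.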

Another cool example from Pearl's paper:

Burglar Alarm Example

What is the probability that there is a burglary given that Ali calls? 0.0162 (slide 10)

In [14]:

    #Priors (state 0 = true, state 1 = false)
    pr_bur = [0.001, 0.999]
    pr_earth = [0.002, 0.998]
    pr_bur_earth = [1, 1, 1, 1]  # dummy variable holding the joint (Burglary, Earthquake) state
    pr_alarm = [1, 1]
    pr_ali = [1, 0] #Ali calls
    pr_veli = [1, 1]

    #Edge potentials
    # Pbeb and Pbee tie each of the four B_E states to the matching
    # Burglary and Earthquake states
    Pbeb = [[1, 0],
            [1, 0],
            [0, 1],
            [0, 1]]
    Pbee = [[1, 0],
            [0, 1],
            [1, 0],
            [0, 1]]
    # Alarm given the joint B_E state
    Pabe = [[0.95, 0.94, 0.29, 0.001],
            [0.05, 0.06, 0.71, 0.999]]
    # Ali calls given Alarm
    Paa = [[0.9, 0.05],
           [0.1, 0.95]]
    # Veli calls given Alarm
    Pva = [[0.7, 0.01],
           [0.3, 0.99]]

    vertices = gl.SFrame({'id' : ['Burglary', 'Earthquake', 'B_E', 'Alarm', 'Ali Calls', 'Veli Calls'],
                          'prior' : [pr_bur, pr_earth, pr_bur_earth, pr_alarm, pr_ali, pr_veli]})
    edges = gl.SFrame({'src_id' : ['Burglary', 'Earthquake', 'B_E', 'Alarm', 'Alarm'],
                       'dst_id' : ['B_E', 'B_E', 'Alarm', 'Ali Calls', 'Veli Calls'],
                       'potential' : [Pbeb, Pbee, Pabe, Paa, Pva]})
    g = gl.SGraph()
    g = g.add_vertices(vertices, 'id')
    g = g.add_edges(edges, src_field='src_id', dst_field='dst_id')
    g.show(vlabel='__id', arrows=True)

In [15]:

    marginals = bp.belief_propagation(graph=g,
                                      prior='prior',
                                      edge_potential='potential',
                                      num_iterations=10,
                                      method='sum',
                                      convergence_threshold=0.01).unpack('marginal')
    #Filtering out the dummy variable's values to print nicely
    marginals[['__id', 'marginal.0', 'marginal.1']].filter_by(['B_E'], '__id', exclude=True)

Out[15]:

| __id | marginal.0 | marginal.1 |
|---|---|---|
| Veli Calls | 0.0399720211419 | 0.960027978858 |
| Ali Calls | 1.0 | 0.0 |
| Alarm | 0.0434377117999 | 0.9565622882 |
| Burglary | 0.0162837299468 | 0.983716270053 |
| Earthquake | 0.0113949687738 | 0.988605031226 |

Note: Only the head of the SFrame is printed. This SFrame is lazily evaluated.

You can use len(sf) to force materialization.

What about if Veli also calls right after Ali hangs up? (0.29)

In [16]:

    pr_veli = [1, 0] #Veli calls
    vertices = gl.SFrame({'id' : ['Burglary', 'Earthquake', 'B_E', 'Alarm', 'Ali Calls', 'Veli Calls'],
                          'prior' : [pr_bur, pr_earth, pr_bur_earth, pr_alarm, pr_ali, pr_veli]})
    edges = gl.SFrame({'src_id' : ['Burglary', 'Earthquake', 'B_E', 'Alarm', 'Alarm'],
                       'dst_id' : ['B_E', 'B_E', 'Alarm', 'Ali Calls', 'Veli Calls'],
                       'potential' : [Pbeb, Pbee, Pabe, Paa, Pva]})
    g = gl.SGraph()
    g = g.add_vertices(vertices, 'id')
    g = g.add_edges(edges, src_field='src_id', dst_field='dst_id')

In [17]:

    marginals = bp.belief_propagation(graph=g,
                                      prior='prior',
                                      edge_potential='potential',
                                      num_iterations=10,
                                      method='sum',
                                      convergence_threshold=0.01).unpack('marginal')
    #Filtering out the dummy variable's values to print nicely
    marginals[['__id', 'marginal.0', 'marginal.1']].filter_by(['B_E'], '__id', exclude=True)

PROGRESS: Iteration 1: Sum of l2 change in messages 6.64879
PROGRESS: Iteration 2: Sum of l2 change in messages 7.15545
PROGRESS: Iteration 3: Sum of l2 change in messages 0

Out[17]:

| __id | marginal.0 | marginal.1 |
|---|---|---|
| Veli Calls | 1.0 | 0.0 |
| Ali Calls | 1.0 | 0.0 |
| Alarm | 0.760692038863 | 0.239307961137 |
| Burglary | 0.284171835364 | 0.715828164636 |
| Earthquake | 0.176066838405 | 0.823933161595 |

Note: Only the head of the SFrame is printed. This SFrame is lazily evaluated.

You can use len(sf) to force materialization.
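As a sanity check on the two burglary marginals, the same posteriors can be computed by brute-force enumeration over the hidden variables, since the network is tiny. The sketch below uses only the probabilities from the notebook; the function and variable names are made up for this illustration and are not part of the toolkit.

```python
from itertools import product

# Probabilities copied from the notebook; state 0 = true, state 1 = false
p_burglary = [0.001, 0.999]
p_earthquake = [0.002, 0.998]
# p_alarm_true[b][e] = P(Alarm = true | Burglary = b, Earthquake = e)
p_alarm_true = [[0.95, 0.94], [0.29, 0.001]]
p_ali_true = [0.9, 0.05]    # P(Ali calls | Alarm = a)
p_veli_true = [0.7, 0.01]   # P(Veli calls | Alarm = a)

def bernoulli(p_true, state):
    """Probability of `state` (0 = true, 1 = false) given P(true)."""
    return p_true if state == 0 else 1.0 - p_true

def posterior_burglary(ali=None, veli=None):
    """P(Burglary = true | evidence). Evidence uses 0 = calls, 1 = does not
    call, None = unobserved (an unobserved call sums out to 1)."""
    numerator = 0.0
    evidence = 0.0
    for b, e, a in product(range(2), repeat=3):
        weight = (p_burglary[b] * p_earthquake[e]
                  * bernoulli(p_alarm_true[b][e], a))
        if ali is not None:
            weight *= bernoulli(p_ali_true[a], ali)
        if veli is not None:
            weight *= bernoulli(p_veli_true[a], veli)
        evidence += weight
        if b == 0:
            numerator += weight
    return numerator / evidence

p_given_ali = posterior_burglary(ali=0)            # approximately 0.0163
p_given_both = posterior_burglary(ali=0, veli=0)   # approximately 0.284
print(p_given_ali, p_given_both)
```

The graph is a tree once Burglary and Earthquake are merged into the B_E dummy variable, so BP is exact here and the enumeration should agree with the marginals in the tables.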
